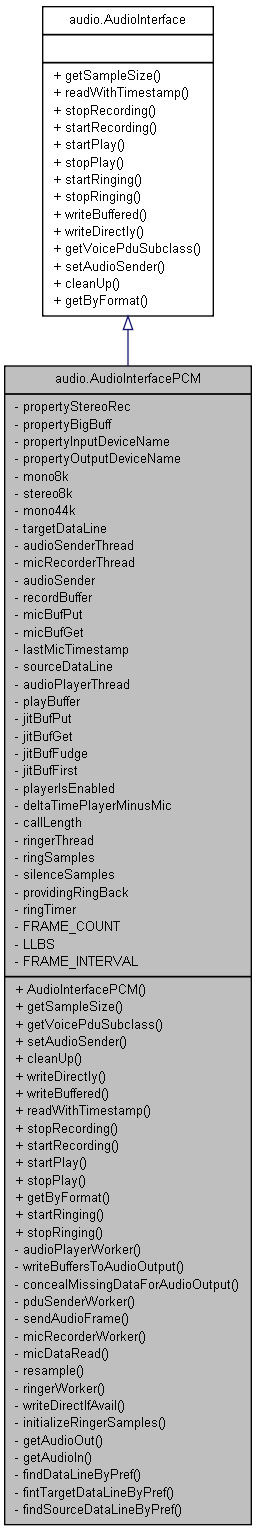

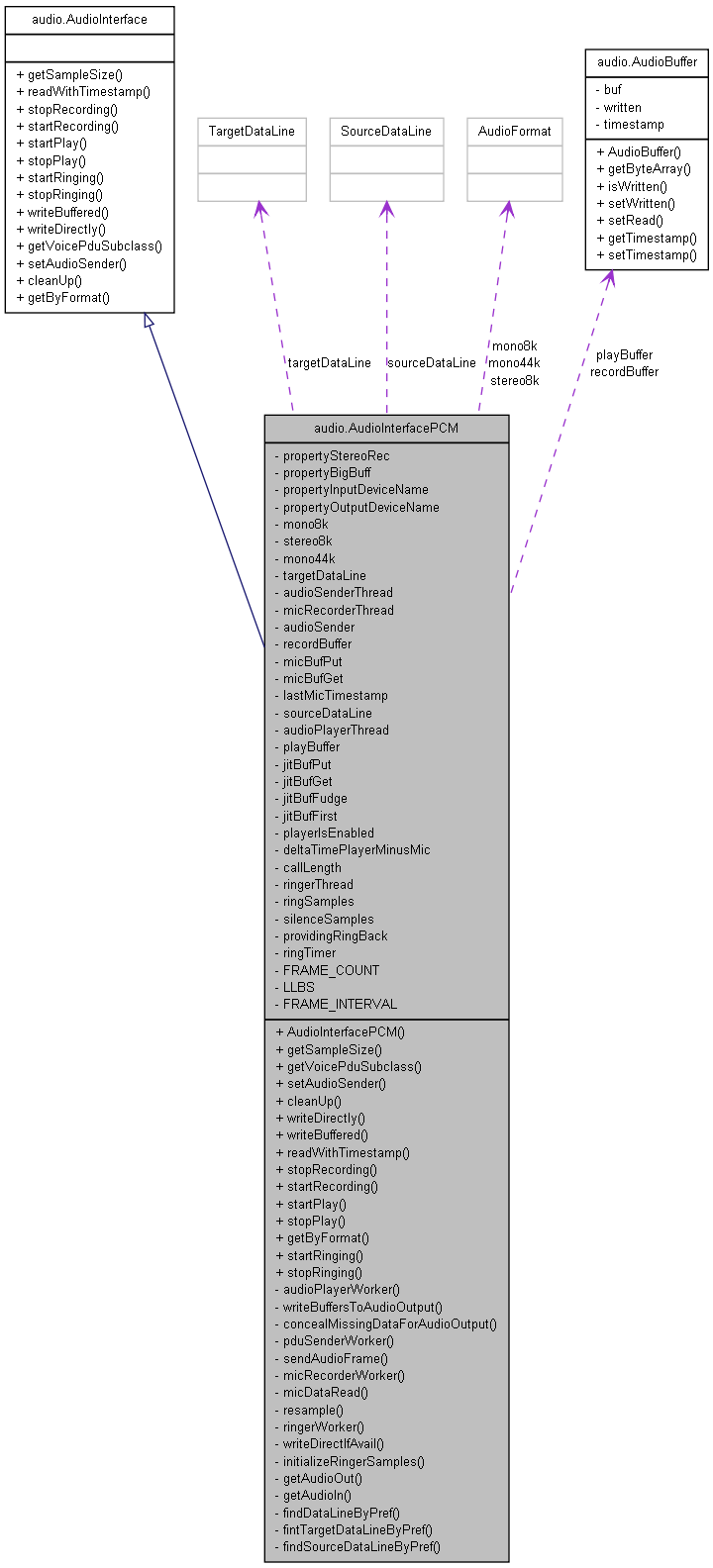

Implements the audio interface for 16-bit signed linear audio (PCM_SIGNED).

Public Member Functions | |

| AudioInterfacePCM () | |

| Constructor for the AudioInterfacePCM object. | |

| int | getSampleSize () |

| Returns the preferred minimum sample size for use in creating buffers etc. | |

| int | getVoicePduSubclass () |

| Returns our VoicePDU format. | |

| void | setAudioSender (AudioInterface.Packetizer as) |

| Sets the active audio sender for the recorder. | |

| void | cleanUp () |

| Stops threads and cleans up the instance. | |

| void | writeDirectly (byte[] buff) |

| Writes directly to source line without buffering. | |

| void | writeBuffered (byte[] buff, long timestamp) throws IOException |

| Enqueue packet for playing into de-jitter buffer. | |

| long | readWithTimestamp (byte[] buff) throws IOException |

| Reads from the microphone into the provided buffer, filling only getSampleSize() bytes. | |

| void | stopRecording () |

| Stops the audio recording worker thread. | |

| long | startRecording () |

| Start the audio recording worker thread. | |

| void | startPlay () |

| Starts the audio output worker thread. | |

| void | stopPlay () |

| Stops the audio output worker thread. | |

| AudioInterface | getByFormat (Integer format) |

| Gets audio interface by VoicePDU format. | |

| void | startRinging () |

| Starts ringing signal. | |

| void | stopRinging () |

| Stops ringing signal. | |

Private Member Functions | |

| void | audioPlayerWorker () |

| Writes frames to audio output, i.e. the source data line. | |

| long | writeBuffersToAudioOutput () |

| Writes de-jittered audio frames to audio output. | |

| void | concealMissingDataForAudioOutput (int n) |

| Conceals missing data in the audio output buffer by averaging samples taken from the previous and next buffer. | |

| void | pduSenderWorker () |

| Sends audio frames to UDP channel at regular intervals (ticks) | |

| void | sendAudioFrame (long set) |

| Called every FRAME_INTERVAL ms to send audio frame. | |

| void | micRecorderWorker () |

| Records audio samples from the microphone. | |

| void | micDataRead () |

| Called from micRecorder to record audio samples from microphone. | |

| void | resample (byte[] src, byte[] dest) |

| Simple PCM down sampler. | |

| void | ringerWorker () |

| Writes ring signal samples to audio output. | |

| long | writeDirectIfAvail (byte[] samples) |

| Writes audio samples to audio output directly (without using jitter buffer). | |

| void | initializeRingerSamples () |

| Initializes ringer samples (ring signal and silence). | |

| boolean | getAudioOut () |

| Get audio output. | |

| boolean | getAudioIn () |

| Returns audio input (target data line) | |

| DataLine | findDataLineByPref (String pref, AudioFormat af, String name, int sbuffsz, Class<?> lineClass, String debugInfo) |

| Searches for a data line of either sort (source/target) based on the pref string. | |

| TargetDataLine | fintTargetDataLineByPref (String pref, AudioFormat af, String name, int sbuffsz) |

| Searches for target data line according to preferences. | |

| SourceDataLine | findSourceDataLineByPref (String pref, AudioFormat af, String name, int sbuffsz) |

| Searches for source data line according to preferences. | |

Private Attributes | |

| boolean | propertyStereoRec = false |

| Stereo recording. | |

| boolean | propertyBigBuff = false |

| Big buffers. | |

| String | propertyInputDeviceName = null |

| Input device name. | |

| String | propertyOutputDeviceName = null |

| Output device name. | |

| AudioFormat | mono8k |

| AudioFormat | stereo8k |

| AudioFormat | mono44k |

| TargetDataLine | targetDataLine = null |

| volatile Thread | audioSenderThread = null |

| volatile Thread | micRecorderThread = null |

| volatile Packetizer | audioSender = null |

| AudioBuffer[] | recordBuffer = new AudioBuffer[ FRAME_COUNT ] |

| int | micBufPut = 0 |

| int | micBufGet = 0 |

| long | lastMicTimestamp = 0 |

| SourceDataLine | sourceDataLine = null |

| volatile Thread | audioPlayerThread = null |

| AudioBuffer[] | playBuffer = new AudioBuffer[ FRAME_COUNT + FRAME_COUNT ] |

| int | jitBufPut = 0 |

| int | jitBufGet = 0 |

| long | jitBufFudge = 0 |

| boolean | jitBufFirst = true |

| boolean | playerIsEnabled = false |

| long | deltaTimePlayerMinusMic = 0 |

| long | callLength = 0 |

| Measured call length in milliseconds. | |

| volatile Thread | ringerThread = null |

| byte[] | ringSamples = null |

| byte[] | silenceSamples = null |

| boolean | providingRingBack = false |

| long | ringTimer = -1 |

Static Private Attributes | |

| static final int | FRAME_COUNT = 10 |

| Audio buffering depth in number of frames. | |

| static final int | LLBS = 6 |

| Low-level water mark used for de-jittering. | |

| static final int | FRAME_INTERVAL = 20 |

| Frame interval in milliseconds. | |

Detailed Description

Implements the audio interface for 16-bit signed linear audio (PCM_SIGNED).

It also provides support for CODECs that can convert to and from Signed LIN16.

Definition at line 27 of file AudioInterfacePCM.java.

Constructor & Destructor Documentation

| audio.AudioInterfacePCM.AudioInterfacePCM | ( | ) |

Constructor for the AudioInterfacePCM object.

Definition at line 112 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.audioPlayerThread, audio.AudioInterfacePCM.audioPlayerWorker(), audio.AudioInterfacePCM.audioSenderThread, audio.AudioInterfacePCM.getAudioIn(), audio.AudioInterfacePCM.getAudioOut(), audio.AudioInterfacePCM.getSampleSize(), audio.AudioInterfacePCM.initializeRingerSamples(), audio.AudioInterfacePCM.mono44k, audio.AudioInterfacePCM.mono8k, audio.AudioInterfacePCM.pduSenderWorker(), audio.AudioInterfacePCM.ringerThread, audio.AudioInterfacePCM.ringerWorker(), audio.AudioInterfacePCM.sourceDataLine, audio.AudioInterfacePCM.stereo8k, and audio.AudioInterfacePCM.targetDataLine.

{

this.mono8k = new AudioFormat(

AudioFormat.Encoding.PCM_SIGNED,

8000f, 16, 1, 2, 8000f, true );

this.stereo8k = new AudioFormat(

AudioFormat.Encoding.PCM_SIGNED,

8000f, 16, 2, 4, 8000f, true );

this.mono44k = new AudioFormat(

AudioFormat.Encoding.PCM_SIGNED,

44100f, 16, 1, 2, 44100f, true );

initializeRingerSamples ();

//////////////////////////////////////////////////////////////////////////////////

/* Initializes source and target data lines used by the instance.

*/

getAudioIn ();

getAudioOut ();

//////////////////////////////////////////////////////////////////////////////////

if ( this.targetDataLine != null )

{

Runnable thread = new Runnable() {

public void run () {

pduSenderWorker ();

}

};

this.audioSenderThread = new Thread( thread, "Tick-send" );

this.audioSenderThread.setDaemon( true );

this.audioSenderThread.setPriority( Thread.MAX_PRIORITY - 1 );

this.audioSenderThread.start();

}

//////////////////////////////////////////////////////////////////////////////////

if ( this.sourceDataLine != null )

{

Runnable thread = new Runnable () {

public void run () {

audioPlayerWorker ();

}

};

this.audioPlayerThread = new Thread( thread, "Tick-play" );

this.audioPlayerThread.setDaemon( true );

this.audioPlayerThread.setPriority( Thread.MAX_PRIORITY );

this.audioPlayerThread.start();

}

//////////////////////////////////////////////////////////////////////////////////

if ( this.sourceDataLine != null )

{

Runnable thread = new Runnable () {

public void run () {

ringerWorker ();

}

};

this.ringerThread = new Thread( thread, "Ringer" );

this.ringerThread.setDaemon( true );

this.ringerThread.setPriority( Thread.MIN_PRIORITY );

this.ringerThread.start ();

}

//////////////////////////////////////////////////////////////////////////////////

if ( this.sourceDataLine == null ) {

Log.attn( "Failed to open audio output device (speaker or similar)" );

}

if ( this.targetDataLine == null ) {

Log.attn( "Failed to open audio capture device (microphone or similar)" );

}

Log.trace( "Created 8kHz 16-bit PCM audio interface; Sample size = "

+ this.getSampleSize () + " octets" );

}

Member Function Documentation

| void audio.AudioInterfacePCM.audioPlayerWorker | ( | ) | [private] |

Writes frames to audio output, i.e. the source data line.

Definition at line 308 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.audioPlayerThread, audio.AudioInterfacePCM.FRAME_INTERVAL, audio.AudioInterfacePCM.sourceDataLine, and audio.AudioInterfacePCM.writeBuffersToAudioOutput().

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM().

{

Log.trace( "Thread started" );

while( this.audioPlayerThread != null )

{

if ( this.sourceDataLine == null ) {

break;

}

long next = this.writeBuffersToAudioOutput ();

if ( next < 1 ) {

next = FRAME_INTERVAL;

}

try {

Thread.sleep( next );

} catch( Throwable e ) {

/* ignored */

}

}

Log.trace( "Thread completed" );

this.audioPlayerThread = null;

}

| void audio.AudioInterfacePCM.cleanUp | ( | ) | [virtual] |

Stops threads and cleans up the instance.

Implements audio.AudioInterface.

Definition at line 236 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.audioPlayerThread, audio.AudioInterfacePCM.audioSenderThread, audio.AudioInterfacePCM.micRecorderThread, audio.AudioInterfacePCM.ringerThread, audio.AudioInterfacePCM.sourceDataLine, and audio.AudioInterfacePCM.targetDataLine.

Referenced by audio.AbstractCODEC.cleanUp().

{

/* Signal all worker threads to quit

*/

Thread spkout = this.audioPlayerThread;

Thread ringer = this.ringerThread;

Thread micin = this.micRecorderThread;

Thread sender = this.audioSenderThread;

this.audioPlayerThread = null;

this.ringerThread = null;

this.micRecorderThread = null;

this.audioSenderThread = null;

/* Wait for worker threads to complete

*/

if ( spkout != null ) {

try {

spkout.interrupt ();

spkout.join ();

} catch ( InterruptedException e ) {

/* ignored */

}

}

if ( ringer != null ) {

try {

ringer.interrupt ();

ringer.join ();

} catch ( InterruptedException e ) {

/* ignored */

}

}

if ( micin != null ) {

try {

micin.interrupt ();

micin.join ();

} catch ( InterruptedException e ) {

/* ignored */

}

}

if ( sender != null ) {

try {

sender.interrupt ();

sender.join ();

} catch ( InterruptedException e ) {

/* ignored */

}

}

/* Be nice and close audio data lines

*/

if ( this.sourceDataLine != null )

{

this.sourceDataLine.close ();

this.sourceDataLine = null;

}

if ( this.targetDataLine != null )

{

this.targetDataLine.close ();

this.targetDataLine = null;

}

}
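The shutdown sequence in the listing above follows a common pattern: copy the volatile thread reference, clear the field so the worker's loop condition fails, then interrupt and join the thread. The following is a minimal sketch of that pattern in isolation. `WorkerStopSketch` and all of its members are hypothetical names used for illustration only; they are not part of `AudioInterfacePCM`.

```java
final class WorkerStopSketch {
    // The worker loop runs for as long as this field is non-null.
    private volatile Thread worker;

    void start() {
        Thread t = new Thread(this::loop, "sketch-worker");
        t.setDaemon(true);
        this.worker = t;   // publish before starting so the loop sees it
        t.start();
    }

    private void loop() {
        while (this.worker != null) {
            try {
                Thread.sleep(20);
            } catch (InterruptedException e) {
                /* woken up early; the loop condition is re-checked */
            }
        }
    }

    // Returns true once the worker has terminated (or was never started).
    boolean stop() {
        Thread t = this.worker;
        this.worker = null;            // signal the loop to exit
        if (t == null) {
            return true;
        }
        t.interrupt();                 // cut a pending sleep short
        try {
            t.join();                  // wait for the loop to finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !t.isAlive();
    }

    public static void main(String[] args) {
        WorkerStopSketch w = new WorkerStopSketch();
        w.start();
        System.out.println("stopped: " + w.stop());
    }
}
```

Copying the reference into a local before clearing the field matters here: once the field is null there would otherwise be nothing left to interrupt or join.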

| void audio.AudioInterfacePCM.concealMissingDataForAudioOutput | ( | int | n ) | [private] |

Conceals missing data in the audio output buffer by averaging samples taken from the previous and next buffer.

Definition at line 490 of file AudioInterfacePCM.java.

References audio.AudioBuffer.getByteArray(), and audio.AudioInterfacePCM.playBuffer.

Referenced by audio.AudioInterfacePCM.writeBuffersToAudioOutput().

{

byte[] target = this.playBuffer[n % this.playBuffer.length].getByteArray();

byte[] prev = this.playBuffer[ (n - 1) % this.playBuffer.length].getByteArray();

byte[] next = this.playBuffer[ (n + 1) % this.playBuffer.length].getByteArray();

/* Creates a packet by averaging the corresponding bytes

* in the surrounding packets hoping that new packet will sound better

* than silence.

* TODO fix for 16-bit samples

*/

for ( int i = 0; i < target.length; ++i )

{

target[i] = (byte) ( 0xFF & ( (prev[ i ] >> 1 ) + ( next[i] >> 1 ) ) );

}

}
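The listing above averages the neighbouring buffers byte by byte and carries a TODO for 16-bit samples. As a hedged illustration of what a per-sample version might look like, the sketch below averages 16-bit signed big-endian samples (matching the `bigEndian = true` formats created in the constructor). It uses `java.nio.ByteBuffer` rather than the project's own buffer classes, and `ConcealSketch`, `conceal` and `prevIndex` are hypothetical names. The `prevIndex` helper is included because Java's `%` operator yields a negative result for a negative dividend, so an expression like `(n - 1) % length` would be negative if `n` were ever 0.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

final class ConcealSketch {
    // Fills target with the per-sample mean of prev and next.
    // All buffers are assumed to hold 16-bit signed big-endian samples.
    static void conceal(byte[] prev, byte[] next, byte[] target) {
        ByteBuffer p = ByteBuffer.wrap(prev).order(ByteOrder.BIG_ENDIAN);
        ByteBuffer n = ByteBuffer.wrap(next).order(ByteOrder.BIG_ENDIAN);
        ByteBuffer t = ByteBuffer.wrap(target).order(ByteOrder.BIG_ENDIAN);
        int samples = Math.min(target.length, Math.min(prev.length, next.length)) / 2;
        for (int i = 0; i < samples; i++) {
            // Shorts are promoted to int, so the sum cannot overflow.
            int mean = (p.getShort(i * 2) + n.getShort(i * 2)) / 2;
            t.putShort(i * 2, (short) mean);
        }
    }

    // Index of the slot before n in a ring of the given length,
    // guaranteed to be non-negative.
    static int prevIndex(int n, int length) {
        return Math.floorMod(n - 1, length);
    }

    public static void main(String[] args) {
        byte[] target = new byte[2];
        conceal(new byte[] { 1, 0 }, new byte[] { 3, 0 }, target);
        System.out.println(target[0] + "," + target[1]);
    }
}
```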

| DataLine audio.AudioInterfacePCM.findDataLineByPref | ( | String | pref, |

| AudioFormat | af, | ||

| String | name, | ||

| int | sbuffsz, | ||

| Class<?> | lineClass, | ||

| String | debugInfo | ||

| ) | [private] |

Searches for a data line of either sort (source/target) based on the pref string.

Uses lineClass to determine the sort, i.e. Target or Source. The debugInfo parameter is used only in debug printouts to set the context.

Definition at line 1035 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.findSourceDataLineByPref(), and audio.AudioInterfacePCM.fintTargetDataLineByPref().

{

DataLine line = null;

DataLine.Info info = new DataLine.Info(lineClass, af);

try

{

if ( pref == null )

{

line = (DataLine) AudioSystem.getLine( info );

}

else

{

Mixer.Info[] mixes = AudioSystem.getMixerInfo();

for ( int i = 0; i < mixes.length; ++i )

{

Mixer.Info mixi = mixes[i];

String mixup = mixi.getName().trim();

Log.audio( "Mix " + i + " " + mixup );

if ( mixup.equals( pref ) )

{

Log.audio( "Found name match for prefered input mixer" );

Mixer preferedMixer = AudioSystem.getMixer( mixi );

if ( preferedMixer.isLineSupported( info ) )

{

line = (DataLine) preferedMixer.getLine( info );

Log.audio( "Got " + debugInfo + " line" );

break;

}

else

{

Log.audio( debugInfo + " format not supported" );

}

}

}

}

}

catch( Exception e )

{

Log.warn( "Unable to get a " + debugInfo + " line of type: " + name );

line = null;

}

return line;

}

| SourceDataLine audio.AudioInterfacePCM.findSourceDataLineByPref | ( | String | pref, |

| AudioFormat | af, | ||

| String | name, | ||

| int | sbuffsz | ||

| ) | [private] |

Searches for source data line according to preferences.

Definition at line 1115 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.findDataLineByPref().

Referenced by audio.AudioInterfacePCM.getAudioOut().

{

String debtxt = "play";

SourceDataLine line = (SourceDataLine) findDataLineByPref( pref, af, name,

sbuffsz, SourceDataLine.class, debtxt );

if ( line != null )

{

try

{

line.open( af, sbuffsz );

Log.audio( "Got a " + debtxt + " line of type: " + name

+ ", buffer size = " + line.getBufferSize () );

}

catch( LineUnavailableException e )

{

Log.warn( "Unable to get a " + debtxt + " line of type: " + name );

line = null;

}

}

return line;

}

| TargetDataLine audio.AudioInterfacePCM.fintTargetDataLineByPref | ( | String | pref, |

| AudioFormat | af, | ||

| String | name, | ||

| int | sbuffsz | ||

| ) | [private] |

Searches for target data line according to preferences.

Definition at line 1086 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.findDataLineByPref().

Referenced by audio.AudioInterfacePCM.getAudioIn().

{

String debugInfo = "recording";

TargetDataLine line = (TargetDataLine) findDataLineByPref(pref, af, name,

sbuffsz, TargetDataLine.class, debugInfo );

if ( line != null )

{

try

{

line.open( af, sbuffsz );

Log.audio( "Got a " + debugInfo + " line of type: " + name

+ ", buffer size = " + line.getBufferSize () );

}

catch( LineUnavailableException e )

{

Log.warn( "Unable to get a " + debugInfo + " line of type: " + name );

line = null;

}

}

return line;

}

| boolean audio.AudioInterfacePCM.getAudioIn | ( | ) | [private] |

Returns audio input (target data line)

Definition at line 963 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.fintTargetDataLineByPref(), audio.AudioInterfacePCM.FRAME_INTERVAL, audio.AudioInterfacePCM.LLBS, audio.AudioInterfacePCM.mono44k, audio.AudioInterfacePCM.mono8k, audio.AudioInterfacePCM.propertyBigBuff, audio.AudioInterfacePCM.propertyInputDeviceName, audio.AudioInterfacePCM.propertyStereoRec, audio.AudioInterfacePCM.recordBuffer, audio.AudioInterfacePCM.stereo8k, and audio.AudioInterfacePCM.targetDataLine.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM().

{

this.targetDataLine = null;

boolean succeded = false;

/* Make a list of formats we can live with

*/

String names[] = { "mono8k", "mono44k" };

AudioFormat[] afsteps = { this.mono8k, this.mono44k };

if ( propertyStereoRec ) {

names[0] = "stereo8k";

afsteps[0] = this.stereo8k;

}

int[] smallbuff = {

(int) Math.round( LLBS * afsteps[0].getFrameSize() * afsteps[0].getFrameRate()

* FRAME_INTERVAL / 1000.0 ),

(int) Math.round( LLBS * afsteps[1].getFrameSize() * afsteps[1].getFrameRate()

* FRAME_INTERVAL / 1000.0 )

};

/* If LLBS > 4 then these can be the same ( Should tweak based on LLB really.)

*/

int[] bigbuff = smallbuff;

/* choose one based on audio properties

*/

int[] buff = propertyBigBuff ? bigbuff : smallbuff;

/* now try and find a device that will do it - and live up to the preferences

*/

String pref = propertyInputDeviceName;

int fno = 0;

this.targetDataLine = null;

for ( ; fno < afsteps.length && this.targetDataLine == null; ++fno )

{

this.targetDataLine = fintTargetDataLineByPref(pref,

afsteps[fno], names[fno], buff[fno] );

}

if ( this.targetDataLine != null )

{

Log.audio( "TargetDataLine =" + this.targetDataLine + ", fno = " + fno );

AudioFormat af = this.targetDataLine.getFormat ();

/* now allocate some buffer space in the raw format

*/

int inputBufferSize = (int) ( af.getFrameRate() * af.getFrameSize() / 50.0 );

Log.trace( "Input Buffer Size = " + inputBufferSize );

for ( int i = 0; i < this.recordBuffer.length; ++i ) {

this.recordBuffer[i] = new AudioBuffer( inputBufferSize );

}

succeded = true;

}

else

{

Log.warn( "No audio input device available" );

}

return succeded;

}
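To make the buffer arithmetic in the listing above concrete, the sketch below evaluates the same two expressions with plain numbers. It assumes the documented constants `LLBS = 6` and `FRAME_INTERVAL = 20` and the frame rates and frame sizes of the `mono8k`, `stereo8k` and `mono44k` formats; the class and method names are hypothetical.

```java
final class LineBufferSketch {
    static final int LLBS = 6;            // low-water mark, in frames
    static final int FRAME_INTERVAL = 20; // milliseconds

    // Size requested when opening the data line: LLBS frame intervals of audio.
    static int lineBufferBytes(float frameRate, int frameSize) {
        return (int) Math.round(LLBS * frameSize * frameRate * FRAME_INTERVAL / 1000.0);
    }

    // Size of one AudioBuffer in the raw line format: 1/50 s of audio.
    static int frameBufferBytes(float frameRate, int frameSize) {
        return (int) (frameRate * frameSize / 50.0);
    }

    public static void main(String[] args) {
        System.out.println("mono8k line buffer:   " + lineBufferBytes(8000f, 2));
        System.out.println("stereo8k line buffer: " + lineBufferBytes(8000f, 4));
        System.out.println("mono44k line buffer:  " + lineBufferBytes(44100f, 2));
        System.out.println("mono8k frame buffer:  " + frameBufferBytes(8000f, 2));
        System.out.println("mono44k frame buffer: " + frameBufferBytes(44100f, 2));
    }
}
```

With these inputs the line buffers come to 1920, 3840 and 10584 bytes, and the per-frame buffers to 320 and 1764 bytes. Note that in the listing `bigbuff` is assigned the same array as `smallbuff`, so `propertyBigBuff` does not change the input buffer size.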

| boolean audio.AudioInterfacePCM.getAudioOut | ( | ) | [private] |

Get audio output.

Initializes source data line.

Definition at line 912 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.findSourceDataLineByPref(), audio.AudioInterfacePCM.FRAME_INTERVAL, audio.AudioInterfacePCM.LLBS, audio.AudioInterfacePCM.mono8k, audio.AudioInterfacePCM.playBuffer, audio.AudioInterfacePCM.propertyBigBuff, audio.AudioInterfacePCM.propertyOutputDeviceName, audio.AudioInterfacePCM.propertyStereoRec, audio.AudioInterfacePCM.sourceDataLine, and audio.AudioInterfacePCM.stereo8k.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM().

{

this.sourceDataLine = null;

boolean succeded = false;

AudioFormat af;

String name;

if ( propertyStereoRec ) {

af = this.stereo8k;

name = "stereo8k";

} else {

af = this.mono8k;

name = "mono8k";

}

int buffsz = (int) Math.round( LLBS * af.getFrameSize() * af.getFrameRate() *

FRAME_INTERVAL / 1000.0 );

if ( propertyBigBuff ) {

buffsz *= 2.5;

}

/* We want to do tricky stuff on the 8k mono stream before

* play back, so we accept no other sort of line.

*/

String pref = propertyOutputDeviceName;

SourceDataLine sdl = findSourceDataLineByPref( pref, af, name, buffsz );

if ( sdl != null )

{

int outputBufferSize = (int) ( af.getFrameRate() * af.getFrameSize() / 50.0 );

Log.trace( "Output Buffer Size = " + outputBufferSize );

for ( int i = 0; i < this.playBuffer.length; ++i ) {

this.playBuffer[i] = new AudioBuffer( outputBufferSize );

}

this.sourceDataLine = sdl;

succeded = true;

}

else

{

Log.warn( "No audio output device available" );

}

return succeded;

}

| AudioInterface audio.AudioInterfacePCM.getByFormat | ( | Integer | format ) | [virtual] |

Gets audio interface by VoicePDU format.

- Returns:

- AudioInterfacePCM

Implements audio.AudioInterface.

Definition at line 1270 of file AudioInterfacePCM.java.

References protocol.VoicePDU.ALAW, protocol.VoicePDU.LIN16, and protocol.VoicePDU.ULAW.

Referenced by audio.AbstractCODEC.getByFormat().

{

AudioInterface ret = null;

switch( format.intValue () )

{

case VoicePDU.ALAW:

ret = new AudioCodecAlaw(this);

break;

case VoicePDU.ULAW:

ret = new AudioCodecUlaw(this);

break;

case VoicePDU.LIN16:

ret = this;

break;

default:

Log.warn( "Invalid format for Audio " + format.intValue () );

Log.warn( "Forced uLaw " );

ret = new AudioCodecUlaw(this);

break;

}

Log.audio( "Using audio Interface of type : " + ret.getClass().getName() );

return ret;

}

| int audio.AudioInterfacePCM.getSampleSize | ( | ) | [virtual] |

Returns the preferred minimum sample size for use in creating buffers etc.

Implements audio.AudioInterface.

Definition at line 206 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.FRAME_INTERVAL, and audio.AudioInterfacePCM.mono8k.

Referenced by audio.AudioCodecAlaw.AudioCodecAlaw(), audio.AudioCodecUlaw.AudioCodecUlaw(), audio.AudioInterfacePCM.AudioInterfacePCM(), and audio.AudioInterfacePCM.initializeRingerSamples().

{

AudioFormat af = this.mono8k;

return (int) ( af.getFrameRate() * af.getFrameSize() * FRAME_INTERVAL / 1000.0 );

}
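As a worked example of the expression above: with the `mono8k` format (8000 frames per second, 2 bytes per frame) and `FRAME_INTERVAL = 20`, the result is 8000 × 2 × 20 / 1000 = 320 bytes, i.e. 160 16-bit samples per frame. The sketch below reproduces the calculation with plain parameters; `SampleSizeSketch` and `bytesPerInterval` are hypothetical names.

```java
final class SampleSizeSketch {
    // Bytes needed to hold intervalMs milliseconds of audio.
    static int bytesPerInterval(float frameRate, int frameSize, int intervalMs) {
        return (int) (frameRate * frameSize * intervalMs / 1000.0);
    }

    public static void main(String[] args) {
        int bytes = bytesPerInterval(8000f, 2, 20);
        System.out.println(bytes + " bytes = " + (bytes / 2) + " samples");
    }
}
```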

| int audio.AudioInterfacePCM.getVoicePduSubclass | ( | ) | [virtual] |

Returns our VoicePDU format.

Implements audio.AudioInterface.

Definition at line 216 of file AudioInterfacePCM.java.

{

return protocol.VoicePDU.LIN16;

}

| void audio.AudioInterfacePCM.initializeRingerSamples | ( | ) | [private] |

Initializes ringer samples (ring signal and silence).

Definition at line 879 of file AudioInterfacePCM.java.

References utils.OctetBuffer.allocate(), audio.AudioInterfacePCM.getSampleSize(), utils.OctetBuffer.getStore(), utils.OctetBuffer.putShort(), audio.AudioInterfacePCM.ringSamples, and audio.AudioInterfacePCM.silenceSamples.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM().

{

/* First generate silence

*/

int numOfSamples = this.getSampleSize ();

this.silenceSamples = new byte[ numOfSamples ];

/* Now generate ringing tone as two superimposed frequencies.

*/

double freq1 = 25.0 / 8000;

double freq2 = 420.0 / 8000;

OctetBuffer rbb = OctetBuffer.allocate( numOfSamples );

for ( int i = 0; i < 160; ++i )

{

short s = (short) ( Short.MAX_VALUE

* Math.sin( 2.0 * Math.PI * freq1 * i )

* Math.sin( 4.0 * Math.PI * freq2 * i )

/ 4.0 /* signal level ~= -12 dB */

);

rbb.putShort( s );

}

this.ringSamples = rbb.getStore ();

}
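The tone in the listing above is the product of two sines: a slow 25 Hz term and a second term whose `4.0 * Math.PI` factor gives it an effective frequency of 840 Hz, scaled to a quarter of full range (about -12 dB). The sketch below generates one such frame using `java.nio.ByteBuffer` in place of the project's `OctetBuffer`; it assumes an 8 kHz sample rate and big-endian 16-bit samples, and `RingToneSketch`, `ringFrame` and `peak` are hypothetical names.

```java
import java.nio.ByteBuffer;

final class RingToneSketch {
    // Builds sampleCount 16-bit big-endian samples of the two-sine ring tone.
    static byte[] ringFrame(int sampleCount) {
        double freq1 = 25.0 / 8000;
        double freq2 = 420.0 / 8000;
        ByteBuffer buf = ByteBuffer.allocate(sampleCount * 2); // big-endian by default
        for (int i = 0; i < sampleCount; i++) {
            short s = (short) (Short.MAX_VALUE
                    * Math.sin(2.0 * Math.PI * freq1 * i)
                    * Math.sin(4.0 * Math.PI * freq2 * i)
                    / 4.0);
            buf.putShort(s);
        }
        return buf.array();
    }

    // Largest absolute sample value in a frame.
    static int peak(byte[] frame) {
        ByteBuffer buf = ByteBuffer.wrap(frame);
        int max = 0;
        for (int i = 0; i + 1 < frame.length; i += 2) {
            max = Math.max(max, Math.abs(buf.getShort(i)));
        }
        return max;
    }

    public static void main(String[] args) {
        byte[] frame = ringFrame(160);
        System.out.println(frame.length + " bytes, peak " + peak(frame));
    }
}
```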

| void audio.AudioInterfacePCM.micDataRead | ( | ) | [private] |

Called from micRecorder to record audio samples from microphone.

Blocks as needed.

Definition at line 673 of file AudioInterfacePCM.java.

References audio.AudioBuffer.getByteArray(), audio.AudioBuffer.isWritten(), audio.AudioInterfacePCM.lastMicTimestamp, audio.AudioInterfacePCM.micBufPut, audio.AudioInterfacePCM.recordBuffer, audio.AudioBuffer.setTimestamp(), audio.AudioBuffer.setWritten(), and audio.AudioInterfacePCM.targetDataLine.

Referenced by audio.AudioInterfacePCM.micRecorderWorker().

{

try

{

int fresh = this.micBufPut % this.recordBuffer.length;

AudioBuffer ab = this.recordBuffer[ fresh ];

byte[] buff = ab.getByteArray ();

this.targetDataLine.read( buff, 0, buff.length );

long stamp = this.targetDataLine.getMicrosecondPosition () / 1000;

if ( stamp >= this.lastMicTimestamp )

{

if ( ab.isWritten () ) {

// Overrun audio data: stamp + "/" + got

}

ab.setTimestamp( stamp );

ab.setWritten (); // should test for overrun ???

/* Out audio data into buffer:

* fresh + " " + ab.getTimestamp() + "/" + this.micBufPut

*/

++this.micBufPut;

}

else // Seen at second and subsequent activations, garbage data

{

/* Drop audio data */

}

this.lastMicTimestamp = stamp;

}

catch( Exception e )

{

/* ignored */

}

}

| void audio.AudioInterfacePCM.micRecorderWorker | ( | ) | [private] |

Records audio samples from the microphone.

Definition at line 651 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.micDataRead(), audio.AudioInterfacePCM.micRecorderThread, and audio.AudioInterfacePCM.targetDataLine.

Referenced by audio.AudioInterfacePCM.startRecording().

{

Log.trace( "Thread started" );

while( this.micRecorderThread != null )

{

if ( this.targetDataLine == null ) {

break;

}

micDataRead ();

}

Log.trace( "Thread stopped" );

this.micRecorderThread = null;

}

| void audio.AudioInterfacePCM.pduSenderWorker | ( | ) | [private] |

Sends audio frames to UDP channel at regular intervals (ticks)

Definition at line 561 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.audioSenderThread, audio.AudioInterfacePCM.FRAME_INTERVAL, audio.AudioInterfacePCM.sendAudioFrame(), and audio.AudioInterfacePCM.targetDataLine.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM().

{

Log.trace( "Thread started" );

long set = 0;

long last, point = 0;

boolean audioTime = false;

while( this.audioSenderThread != null )

{

if ( this.targetDataLine == null ) {

break;

}

/* This should be current time: interval += FRAME_INTERVAL

*/

point += FRAME_INTERVAL;

/* Delta time

*/

long delta = point - set + FRAME_INTERVAL;

if ( this.targetDataLine.isActive () )

{

if ( ! audioTime ) // Take care of "discontinuous time"

{

audioTime = true;

set = this.targetDataLine.getMicrosecondPosition() / 1000;

last = point = set;

}

}

else

{

point = 0;

delta = FRAME_INTERVAL; // We are live before TDL

set = System.currentTimeMillis (); // For ring cadence

audioTime = false;

}

sendAudioFrame( set );

// If we are late, set is larger than last so we sleep less

// If we are early, set is smaller than last and we sleep longer

//

if ( delta > 1 ) // Only sleep if it is worth it...

{

try {

Thread.sleep( delta );

} catch( InterruptedException e ) {

/* ignored */

}

}

last = set;

if ( audioTime ) {

set = this.targetDataLine.getMicrosecondPosition() / 1000;

}

if ( point > 0 ) {

Log.audio( "Ticker: slept " + delta + " from " + last + ", now " + set );

}

}

Log.trace( "Thread completed" );

this.audioSenderThread = null;

}

| long audio.AudioInterfacePCM.readWithTimestamp | ( | byte[] | buff ) | throws IOException [virtual] |

Reads from the microphone into the provided buffer, filling only getSampleSize() bytes.

Returns the timestamp of the sample from the audio clock.

- Parameters:

- buff audio samples

- Returns:

- the timestamp of the sample from the audio clock.

- Exceptions:

- IOException Description of Exception

Implements audio.AudioInterface.

Definition at line 722 of file AudioInterfacePCM.java.

References audio.AudioBuffer.getByteArray(), audio.AudioBuffer.getTimestamp(), audio.AudioBuffer.isWritten(), audio.AudioInterfacePCM.micBufGet, audio.AudioInterfacePCM.micBufPut, audio.AudioInterfacePCM.recordBuffer, audio.AudioInterfacePCM.resample(), audio.AudioBuffer.setRead(), and audio.AudioInterfacePCM.silenceSamples.

Referenced by audio.AbstractCODEC.readWithTimestamp().

{

int micnext = this.micBufGet % this.recordBuffer.length;

int buffCap = (this.micBufPut - this.micBufGet ) % this.recordBuffer.length;

long timestamp = 0;

Log.audio( "Getting audio data from buffer " + micnext + "/" + buffCap );

AudioBuffer ab = this.recordBuffer[ micnext ];

if ( ab.isWritten ()

&& ( this.micBufGet > 0 || buffCap >= this.recordBuffer.length / 2 ) )

{

timestamp = ab.getTimestamp ();

resample( ab.getByteArray(), buff );

ab.setRead ();

++this.micBufGet;

}

else

{

System.arraycopy( this.silenceSamples, 0, buff, 0, buff.length );

Log.audio( "Sending silence" );

timestamp = ab.getTimestamp (); // or should we warn them ??

}

return timestamp;

}
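The read condition in the listing above can be read as a pre-fill rule: before the first read (`micBufGet == 0`) the buffer must be at least half full, and afterwards any written slot is accepted. The sketch below isolates that index arithmetic so it can be tried with sample values; `MicRingSketch`, `slot` and `canRead` are hypothetical names, and the sketch deliberately mirrors the listing's modulo expressions rather than proposing a different scheme.

```java
final class MicRingSketch {
    // Slot in the ring for a monotonically increasing counter.
    static int slot(int counter, int length) {
        return counter % length;
    }

    // Mirrors the listing: a written slot may be read once reading has
    // started, or once at least half of the ring has been filled.
    static boolean canRead(boolean written, int get, int put, int length) {
        int filled = (put - get) % length;
        return written && (get > 0 || filled >= length / 2);
    }

    public static void main(String[] args) {
        int length = 10; // FRAME_COUNT in the documented class
        System.out.println(canRead(true, 0, 4, length)); // still pre-filling
        System.out.println(canRead(true, 0, 5, length)); // half full
    }
}
```

One consequence of the modulo form is that `filled` wraps to 0 when the writer is exactly one full ring ahead of the reader, so the value is a fill indicator only within a single lap.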

| void audio.AudioInterfacePCM.resample | ( | byte[] | src, |

| byte[] | dest | ||

| ) | [private] |

Simple PCM down sampler.

- Parameters:

- src source buffer with audio samples

- dest destination buffer with audio samples

Definition at line 756 of file AudioInterfacePCM.java.

References utils.OctetBuffer.getShort(), utils.OctetBuffer.putShort(), and utils.OctetBuffer.wrap().

Referenced by audio.AudioInterfacePCM.readWithTimestamp().

{

if ( src.length == dest.length )

{

/* Nothing to down sample; copy samples as-is to destination

*/

System.arraycopy( src, 0, dest, 0, src.length );

return;

}

else if ( src.length / 2 == dest.length )

{

/* Source is stereo, send the left channel

*/

for ( int i = 0; i < dest.length / 2; i++ )

{

dest[i * 2] = src[i * 4];

dest[i * 2 + 1] = src[i * 4 + 1];

}

return;

}

/* Now real work. We assume that it is 44k stereo 16-bit and will down-sample

* it to 8k (but not very clever: no anti-aliasing etc....).

*/

OctetBuffer srcBuffer = OctetBuffer.wrap( src );

OctetBuffer destBuffer = OctetBuffer.wrap( dest );

/* Iterate over the values we have, add them to the target bucket they fall into

* and count the drops....

*/

int drange = dest.length / 2;

double v[] = new double[ drange ];

double w[] = new double[ drange ];

double frequencyRatio = 8000.0 / 44100.0;

int top = src.length / 2;

for ( int eo = 0; eo < top; ++eo )

{

int samp = (int) Math.floor( eo * frequencyRatio );

if ( samp >= drange ) {

samp = drange - 1;

}

v[ samp ] += srcBuffer.getShort( eo * 2 );

w[ samp ]++;

}

/* Now re-weight the samples to ensure no volume quirks and move to short

*/

short vw = 0;

for ( int ei = 0; ei < drange; ++ei )

{

if ( w[ei] != 0 ) {

vw = (short) ( v[ei] / w[ei] );

}

destBuffer.putShort( ei * 2, vw );

}

}
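The general case in the listing above is a bucket average: each source sample is assigned to the destination sample it falls into, and each destination sample becomes the mean of its bucket. The sketch below shows that technique on its own, assuming 16-bit big-endian mono input and using `java.nio.ByteBuffer` instead of the project's `OctetBuffer`. `ResampleSketch` and `downsample` are hypothetical names, and like the original this does no anti-alias filtering.

```java
import java.nio.ByteBuffer;

final class ResampleSketch {
    // Down-samples 16-bit big-endian mono audio from src into dest by
    // averaging all source samples that map onto each destination sample.
    // ratio is destination rate divided by source rate, e.g. 8000.0 / 44100.0.
    static void downsample(byte[] src, byte[] dest, double ratio) {
        int outSamples = dest.length / 2;
        if (outSamples == 0) {
            return;
        }
        ByteBuffer in = ByteBuffer.wrap(src);   // big-endian by default
        ByteBuffer out = ByteBuffer.wrap(dest);
        double[] sum = new double[outSamples];
        int[] count = new int[outSamples];
        int inSamples = src.length / 2;
        for (int i = 0; i < inSamples; i++) {
            // Clamp so rounding at the end never overruns the last bucket.
            int bucket = Math.min((int) Math.floor(i * ratio), outSamples - 1);
            sum[bucket] += in.getShort(i * 2);
            count[bucket]++;
        }
        short last = 0;
        for (int o = 0; o < outSamples; o++) {
            if (count[o] != 0) {
                last = (short) (sum[o] / count[o]);
            }
            out.putShort(o * 2, last); // an empty bucket repeats the previous value
        }
    }

    public static void main(String[] args) {
        byte[] src = new byte[1764];  // 20 ms at 44.1 kHz, 16-bit mono
        byte[] dest = new byte[320];  // 20 ms at 8 kHz, 16-bit mono
        downsample(src, dest, 8000.0 / 44100.0);
        System.out.println(dest.length / 2 + " output samples");
    }
}
```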

| void audio.AudioInterfacePCM.ringerWorker | ( | ) | [private] |

Writes ring signal samples to audio output.

Definition at line 819 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.FRAME_INTERVAL, audio.AudioInterfacePCM.providingRingBack, audio.AudioInterfacePCM.ringerThread, audio.AudioInterfacePCM.ringSamples, audio.AudioInterfacePCM.ringTimer, audio.AudioInterfacePCM.silenceSamples, audio.AudioInterfacePCM.sourceDataLine, and audio.AudioInterfacePCM.writeDirectIfAvail().

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM().

{

Log.trace( "Thread started" );

while( this.ringerThread != null )

{

if ( this.sourceDataLine == null ) {

break;

}

long nap = 100; // default sleep in millis when idle

if ( this.providingRingBack )

{

nap = 0;

while( nap < FRAME_INTERVAL )

{

boolean inRing = ( ( this.ringTimer++ % 120 ) < 40 );

if ( inRing ) {

nap = this.writeDirectIfAvail( this.ringSamples );

} else {

nap = this.writeDirectIfAvail( this.silenceSamples );

}

}

}

try {

Thread.sleep( nap );

} catch( InterruptedException ex ) {

/* ignored */

}

}

Log.trace( "Thread completed" );

this.ringerThread = null;

}
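The cadence test in the listing above, `( ringTimer++ % 120 ) < 40`, splits every 120 ticks into 40 ring ticks followed by 80 silent ticks. Assuming each tick writes one 20 ms frame, that would work out to roughly 0.8 s of tone and 1.6 s of silence per cycle. The sketch below evaluates the same expression for a range of tick values; `RingCadenceSketch` and its methods are hypothetical names.

```java
final class RingCadenceSketch {
    // Same test as the listing: the first 40 of every 120 ticks are "ring".
    static boolean inRing(long tick) {
        return (tick % 120) < 40;
    }

    // Number of ring ticks within one full 120-tick cycle.
    static int ringTicksPerCycle() {
        int n = 0;
        for (long tick = 0; tick < 120; tick++) {
            if (inRing(tick)) {
                n++;
            }
        }
        return n;
    }

    public static void main(String[] args) {
        int frameMs = 20; // assumed duration of one written frame
        int ring = ringTicksPerCycle();
        System.out.println("ring " + ring * frameMs + " ms, silence "
                + (120 - ring) * frameMs + " ms");
    }
}
```

Because `ringTimer` is initialised to -1 and Java's `%` keeps the sign of the dividend, the very first tick also evaluates as a ring tick.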

| void audio.AudioInterfacePCM.sendAudioFrame | ( | long | set ) | [private] |

Called every FRAME_INTERVAL ms to send audio frame.

Definition at line 633 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.audioSender, and audio.AudioInterface.Packetizer.send().

Referenced by audio.AudioInterfacePCM.pduSenderWorker().

{

if ( this.audioSender == null ) {

return;

}

try {

this.audioSender.send ();

} catch( IOException e ) {

Log.exception( Log.WARN, e );

}

}

| void audio.AudioInterfacePCM.setAudioSender | ( | AudioInterface.Packetizer | as ) | [virtual] |

Sets the active audio sender for the recorder.

Implements audio.AudioInterface.

Definition at line 225 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.audioSender.

Referenced by audio.AbstractCODEC.setAudioSender().

{

this.audioSender = as;

}

| void audio.AudioInterfacePCM.startPlay | ( | ) | [virtual] |

Starts the audio output worker thread.

Implements audio.AudioInterface.

Definition at line 1209 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.callLength, audio.AudioInterfacePCM.jitBufFudge, audio.AudioInterfacePCM.jitBufGet, audio.AudioInterfacePCM.jitBufPut, audio.AudioInterfacePCM.playerIsEnabled, and audio.AudioInterfacePCM.sourceDataLine.

Referenced by audio.AbstractCODEC.startPlay(), and audio.AudioInterfacePCM.writeBuffersToAudioOutput().

{

if ( this.sourceDataLine == null ) {

return;

}

Log.audio( "Started playing" );

/* Reset the dejitter buffer

*/

this.jitBufPut = 0;

this.jitBufGet = 0;

this.jitBufFudge = 0;

this.callLength = 0;

this.sourceDataLine.flush ();

this.sourceDataLine.start ();

this.playerIsEnabled = true;

}

| long audio.AudioInterfacePCM.startRecording | ( | ) | [virtual] |

Start the audio recording worker thread.

Implements audio.AudioInterface.

Definition at line 1163 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.lastMicTimestamp, audio.AudioInterfacePCM.micBufGet, audio.AudioInterfacePCM.micBufPut, audio.AudioInterfacePCM.micRecorderThread, audio.AudioInterfacePCM.micRecorderWorker(), audio.AudioInterfacePCM.recordBuffer, audio.AudioBuffer.setRead(), and audio.AudioInterfacePCM.targetDataLine.

Referenced by audio.AbstractCODEC.startRecording().

{

if ( this.targetDataLine == null ) {

return 0;

}

Log.audio( "Started recording" );

if ( this.targetDataLine.available() > 0 )

{

Log.audio( "Flushed recorded data" );

this.targetDataLine.flush ();

this.lastMicTimestamp = Long.MAX_VALUE; // Get rid of spurious samples

}

else

{

this.lastMicTimestamp = 0;

}

this.targetDataLine.start ();

/* Reset the receive buffer pointers

*/

this.micBufPut = this.micBufGet = 0;

for ( int i = 0; i < this.recordBuffer.length; ++i ) {

this.recordBuffer[i].setRead ();

}

Runnable thread = new Runnable() {

public void run () {

micRecorderWorker ();

}

};

this.micRecorderThread = new Thread( thread, "Tick-rec" );

this.micRecorderThread.setDaemon( true );

this.micRecorderThread.setPriority( Thread.MAX_PRIORITY - 1 );

this.micRecorderThread.start ();

return this.targetDataLine.getMicrosecondPosition() / 1000;

}

| void audio.AudioInterfacePCM.startRinging | ( | ) | [virtual] |

Starts the ringing signal.

Implements audio.AudioInterface.

Definition at line 1300 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.providingRingBack, and audio.AudioInterfacePCM.sourceDataLine.

Referenced by audio.AbstractCODEC.startRinging().

{

if ( this.sourceDataLine == null ) {

return;

}

this.sourceDataLine.flush();

this.sourceDataLine.start();

this.providingRingBack = true;

}

| void audio.AudioInterfacePCM.stopPlay | ( | ) | [virtual] |

Stops the audio output worker thread.

Implements audio.AudioInterface.

Definition at line 1233 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.callLength, audio.AudioInterfacePCM.jitBufFudge, audio.AudioInterfacePCM.jitBufGet, audio.AudioInterfacePCM.jitBufPut, audio.AudioInterfacePCM.playerIsEnabled, and audio.AudioInterfacePCM.sourceDataLine.

Referenced by audio.AbstractCODEC.stopPlay().

{

/* Reset the buffer

*/

this.jitBufPut = 0;

this.jitBufGet = 0;

this.playerIsEnabled = false;

if ( this.sourceDataLine == null ) {

return;

}

Log.audio( "Stopped playing" );

this.sourceDataLine.stop ();

if ( this.jitBufFudge != 0 )

{

Log.audio( "Total sample skew: " + this.jitBufFudge );

Log.audio( "Percentage: " + (100.0 * this.jitBufFudge / (8 * this.callLength)) );

this.jitBufFudge = 0;

}

if ( this.callLength > 0 ) {

Log.trace( "Total call Length: " + this.callLength + " ms" );

}

this.sourceDataLine.flush ();

}
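The percentage logged by stopPlay() divides the accumulated skew, counted in samples, by the total number of samples played. Assuming an 8 kHz sample rate there are 8 samples per millisecond, which would explain the factor of 8 applied to callLength. The sketch below reproduces only that arithmetic; the class name, the method name, and the zero-length guard are hypothetical additions, not part of AudioInterfacePCM.

```java
/** Hypothetical helper illustrating the skew percentage logged by stopPlay(). */
class SkewReport {
    /** Samples per millisecond, assuming an 8 kHz sample rate. */
    static final int SAMPLES_PER_MS = 8;

    /**
     * Returns the skew as a percentage of all samples played.
     * The guard for a zero-length call is an addition; without it the
     * original expression would produce a non-finite value.
     */
    static double skewPercentage(long jitBufFudge, long callLengthMs) {
        if (callLengthMs <= 0) {
            return 0.0;
        }
        return 100.0 * jitBufFudge / (SAMPLES_PER_MS * callLengthMs);
    }
}
```

For a one-minute call (60000 ms) with 48 samples of skew, this yields 0.01 percent.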

| void audio.AudioInterfacePCM.stopRecording | ( | ) | [virtual] |

Stops the audio recording worker thread.

Implements audio.AudioInterface.

Definition at line 1146 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.audioSender, audio.AudioInterfacePCM.micRecorderThread, and audio.AudioInterfacePCM.targetDataLine.

Referenced by audio.AbstractCODEC.stopRecording().

{

if ( this.targetDataLine == null ) {

return;

}

Log.audio( "Stopped recording" );

this.targetDataLine.stop ();

this.micRecorderThread = null;

this.audioSender = null;

}
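stopRecording() neither interrupts nor joins the worker; it only clears the volatile micRecorderThread reference. One common way for a worker loop to react to this is to compare the field with its own thread on every iteration. The sketch below shows that idiom in isolation. The class, the loop body, the tick counter, and the join in stopAndWait() are hypothetical; the real micRecorderWorker() may be structured differently.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical worker that stops when its volatile thread reference is cleared. */
class StoppableWorker {
    private volatile Thread workerThread = null;
    final AtomicInteger ticks = new AtomicInteger();

    /** Starts a daemon worker, mirroring the thread setup in startRecording(). */
    void start() {
        Thread t = new Thread(this::work, "Tick-rec");
        t.setDaemon(true);
        t.setPriority(Thread.MAX_PRIORITY - 1);
        this.workerThread = t; // publish before start so the loop sees itself
        t.start();
    }

    /**
     * Clears the reference as stopRecording() does, then waits for the
     * worker to exit. The wait is an addition made for this sketch.
     */
    boolean stopAndWait(long timeoutMs) {
        Thread t = this.workerThread;
        this.workerThread = null;
        if (t == null) {
            return true;
        }
        try {
            t.join(timeoutMs);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !t.isAlive();
    }

    private void work() {
        // Run only while this thread is still the registered worker.
        while (Thread.currentThread() == this.workerThread) {
            ticks.incrementAndGet();
            try {
                Thread.sleep(1);
            } catch (InterruptedException e) {
                return;
            }
        }
    }
}
```

Because the field is volatile, the write in stopAndWait() becomes visible to the worker on its next loop check without extra locking.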

| void audio.AudioInterfacePCM.stopRinging | ( | ) | [virtual] |

Stops the ringing signal.

Implements audio.AudioInterface.

Definition at line 1315 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.providingRingBack, audio.AudioInterfacePCM.ringTimer, and audio.AudioInterfacePCM.sourceDataLine.

Referenced by audio.AbstractCODEC.stopRinging().

{

if ( this.sourceDataLine == null ) {

return;

}

if ( this.providingRingBack )

{

this.providingRingBack = false;

this.ringTimer = -1;

this.sourceDataLine.stop();

this.sourceDataLine.flush();

}

}

| void audio.AudioInterfacePCM.writeBuffered | ( | byte[] buff, long timestamp | ) | throws IOException [virtual] |

Enqueues a packet in the de-jitter buffer for playback.

Implements audio.AudioInterface.

Definition at line 524 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.FRAME_INTERVAL, audio.AudioBuffer.getByteArray(), audio.AudioInterfacePCM.jitBufPut, audio.AudioInterfacePCM.playBuffer, audio.AudioInterfacePCM.propertyStereoRec, audio.AudioBuffer.setTimestamp(), audio.AudioBuffer.setWritten(), and audio.AudioInterfacePCM.sourceDataLine.

Referenced by audio.AbstractCODEC.writeBuffered().

{

if ( this.sourceDataLine == null ) {

return;

}

int fno = (int) ( timestamp / (AudioInterfacePCM.FRAME_INTERVAL ) );

AudioBuffer ab = this.playBuffer[ fno % this.playBuffer.length ];

byte nbuff[] = ab.getByteArray ();

if ( propertyStereoRec )

{

for ( int i = 0; i < nbuff.length / 4; ++i )

{

nbuff[i * 4] = 0; // Left silent

nbuff[i * 4 + 1] = 0; // Left silent

nbuff[i * 4 + 2] = buff[i * 2];

nbuff[i * 4 + 3] = buff[i * 2 + 1];

}

}

else

{

System.arraycopy( buff, 0, nbuff, 0, nbuff.length );

}

ab.setWritten ();

ab.setTimestamp( timestamp );

this.jitBufPut = fno;

}
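Two small computations carry writeBuffered(): the timestamp is divided by FRAME_INTERVAL to get a frame number, which is taken modulo the buffer count to pick a slot, and in stereo mode each 16-bit mono sample is copied to the right channel while the left channel is zeroed. The sketch below isolates both steps. The class and method names are hypothetical, and the stereo method allocates its own output array instead of reusing an AudioBuffer.

```java
/** Hypothetical helpers illustrating the indexing and channel expansion in writeBuffered(). */
class JitterSlot {
    /** Frame interval in milliseconds, as documented for AudioInterfacePCM. */
    static final int FRAME_INTERVAL = 20;

    /** Maps a timestamp in milliseconds to a slot of a circular buffer. */
    static int slotFor(long timestampMs, int bufferCount) {
        int fno = (int) (timestampMs / FRAME_INTERVAL);
        return fno % bufferCount;
    }

    /** Expands 16-bit mono samples to stereo, leaving the left channel silent. */
    static byte[] monoToRightOnlyStereo(byte[] mono) {
        byte[] stereo = new byte[mono.length * 2];
        for (int i = 0; i < mono.length / 2; ++i) {
            stereo[i * 4] = 0;                   // left channel, first byte
            stereo[i * 4 + 1] = 0;               // left channel, second byte
            stereo[i * 4 + 2] = mono[i * 2];     // right channel, first byte
            stereo[i * 4 + 3] = mono[i * 2 + 1]; // right channel, second byte
        }
        return stereo;
    }
}
```

With 20 slots, timestamps 0 ms and 400 ms land in the same slot, so a packet arriving a full buffer cycle late overwrites an older frame.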

| long audio.AudioInterfacePCM.writeBuffersToAudioOutput | ( | ) | [private] |

Writes de-jittered audio frames to audio output.

Definition at line 339 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.callLength, audio.AudioInterfacePCM.concealMissingDataForAudioOutput(), audio.AudioInterfacePCM.deltaTimePlayerMinusMic, audio.AudioInterfacePCM.FRAME_COUNT, audio.AudioInterfacePCM.FRAME_INTERVAL, audio.AudioBuffer.getByteArray(), audio.AudioBuffer.getTimestamp(), audio.AudioBuffer.isWritten(), audio.AudioInterfacePCM.jitBufFirst, audio.AudioInterfacePCM.jitBufFudge, audio.AudioInterfacePCM.jitBufGet, audio.AudioInterfacePCM.jitBufPut, audio.AudioInterfacePCM.lastMicTimestamp, audio.AudioInterfacePCM.LLBS, audio.AudioInterfacePCM.playBuffer, audio.AudioInterfacePCM.playerIsEnabled, audio.AudioBuffer.setRead(), audio.AudioInterfacePCM.sourceDataLine, and audio.AudioInterfacePCM.startPlay().

Referenced by audio.AudioInterfacePCM.audioPlayerWorker().

{

if ( this.sourceDataLine == null ) {

return 0;

}

int top = this.jitBufPut;

if ( top - this.jitBufGet > this.playBuffer.length )

{

if ( this.jitBufGet == 0 ) {

this.jitBufGet = top;

} else {

this.jitBufGet = top - this.playBuffer.length / 2;

}

}

if ( ! this.playerIsEnabled )

{

/* We start once the buffers are half full. FRAME_COUNT is the

* usable buffer capacity; the array is twice that size to keep history for AEC

*/

if ( top - this.jitBufGet >= ( FRAME_COUNT + LLBS ) / 2 )

{

startPlay ();

this.jitBufFirst = true;

}

else

{

return FRAME_INTERVAL;

}

}

int sz = 320;

boolean fudgeSynch = true;

int frameSize = this.sourceDataLine.getFormat().getFrameSize ();

for ( ; this.jitBufGet <= top; ++this.jitBufGet )

{

AudioBuffer ab = this.playBuffer[ this.jitBufGet % this.playBuffer.length ];

byte[] obuff = ab.getByteArray ();

int avail = this.sourceDataLine.available() / (obuff.length + 2);

sz = obuff.length;

/* Take packet this.jitBufGet if available

* Dejitter capacity: top - this.jitBufGet

*/

if ( avail > 0 )

{

if ( ! ab.isWritten () ) // Missing packet

{

/* Flag indicating whether we decide to conceal

* vs to wait for missing data

*/

boolean concealMissingBuffer = false;

if ( avail > LLBS - 2 ) {

// Running out of sound

concealMissingBuffer = true;

}

if ( ( top - this.jitBufGet ) >= ( this.playBuffer.length - 2 ) ) {

// Running out of buffers

concealMissingBuffer = true;

}

if ( this.jitBufGet == 0 ) {

// No data to conceal with

concealMissingBuffer = false;

}

/* Now conceal missing data or wait for it

*/

if ( concealMissingBuffer ) {

concealMissingDataForAudioOutput(this.jitBufGet);

} else {

break; // Waiting for missing data

}

}

int start = 0;

int len = obuff.length;

/* We do adjustments only if we have a timing reference from mic

*/

if ( fudgeSynch && this.lastMicTimestamp > 0

&& this.lastMicTimestamp != Long.MAX_VALUE)

{

/* Only once per writeBuffersToAudioOutput() call, because we depend on this.lastMicTimestamp

*/

fudgeSynch = false;

long delta = ab.getTimestamp() - this.lastMicTimestamp;

if ( this.jitBufFirst )

{

this.deltaTimePlayerMinusMic = delta;

this.jitBufFirst = false;

}

else

{

/* If diff is positive, this means that the source clock is

* running faster than the audio clock so we lop a few bytes

* off and make a note of the fudge factor.

* If diff is negative, this means the audio clock is faster

* than the source clock so we make up a couple of samples

* and note down the fudge factor.

*/

int diff = (int) ( delta - this.deltaTimePlayerMinusMic );

/* We expect the output buffer to be full

*/

int max = (int) Math.round( (LLBS / 2) * FRAME_INTERVAL);

if ( Math.abs(diff) > FRAME_INTERVAL ) {

// "Delta = " + delta + " diff =" + diff );

}

if ( diff > max ) {

start = (diff > (LLBS * FRAME_INTERVAL)) ?

frameSize * 2 : frameSize; // panic ?

len -= start;

// Snip: start / frameSize sample(s)

this.jitBufFudge -= start / frameSize;

}

if (diff < -1 * FRAME_INTERVAL) {

this.sourceDataLine.write( obuff, 0, frameSize );

// Paste: added a sample

this.jitBufFudge += 1;

}

}

}

/* Now write data to audio output and mark audio buffer 'read'

*/

this.sourceDataLine.write( obuff, start, len );

this.callLength += FRAME_INTERVAL;

ab.setRead ();

}

else // No place for (more?) data in SDLB

{

break;

}

}

long ttd = ( ( sz * LLBS / 2 ) - this.sourceDataLine.available() ) / 8;

return ttd;

}
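The clock-skew correction in writeBuffersToAudioOutput() reduces to a threshold decision on diff, the drift in milliseconds relative to the first measured player-minus-microphone delta. The sketch below restates that decision as a pure function, using the documented values FRAME_INTERVAL = 20 and LLBS = 6. The class and method names are hypothetical, and the return value stands for the change applied to jitBufFudge, where one unit is one sample frame.

```java
/** Hypothetical restatement of the skew thresholds in writeBuffersToAudioOutput(). */
class ClockSkew {
    static final int FRAME_INTERVAL = 20; // ms, as documented
    static final int LLBS = 6;            // low-level water mark, as documented

    /**
     * Returns the change in sample count for a given drift:
     * negative means samples are dropped, positive means one is repeated.
     */
    static int adjustmentFor(int diffMs) {
        int max = (LLBS / 2) * FRAME_INTERVAL; // 60 ms
        if (diffMs > max) {
            // Source clock is ahead: drop one sample, or two when far ahead.
            return (diffMs > LLBS * FRAME_INTERVAL) ? -2 : -1;
        }
        if (diffMs < -FRAME_INTERVAL) {
            // Audio clock is ahead: repeat one sample.
            return 1;
        }
        return 0; // within tolerance, no adjustment
    }
}
```

The tolerance band is asymmetric: drift up to +60 ms is accepted before samples are dropped, but a sample is repeated as soon as drift falls below -20 ms.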

| long audio.AudioInterfacePCM.writeDirectIfAvail | ( | byte[] | samples ) | [private] |

Writes audio samples to audio output directly (without using jitter buffer).

- Returns: milliseconds to sleep, after which the next write should occur

Definition at line 862 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.sourceDataLine.

Referenced by audio.AudioInterfacePCM.ringerWorker().

{

if ( this.sourceDataLine == null ) {

return 0;

}

if ( this.sourceDataLine.available () > samples.length ) {

this.sourceDataLine.write( samples, 0, samples.length );

}

long nap = ( samples.length * 2 - this.sourceDataLine.available () ) / 8;

return nap;

}
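The sleep time returned by writeDirectIfAvail() is derived from how much room the output line reports. The sketch below reproduces that expression on plain integers so its behaviour can be checked without an audio device. The class and method names are hypothetical, and the clamped variant is an assumption: this page does not show whether ringerWorker() guards against negative values.

```java
/** Hypothetical helper reproducing the sleep-time arithmetic of writeDirectIfAvail(). */
class NapTime {
    /** The expression from the source; negative when the line is mostly empty. */
    static long nap(int sampleBytes, int availableBytes) {
        return (sampleBytes * 2 - availableBytes) / 8;
    }

    /** Clamped variant; the clamp is an assumption, not taken from the source. */
    static long napClamped(int sampleBytes, int availableBytes) {
        return Math.max(0, nap(sampleBytes, availableBytes));
    }
}
```

For a 320-byte block and 160 writable bytes the result is 60; with 1000 writable bytes the unclamped result is -45.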

| void audio.AudioInterfacePCM.writeDirectly | ( | byte[] | buff ) | [virtual] |

Writes directly to source line without buffering.

Implements audio.AudioInterface.

Definition at line 511 of file AudioInterfacePCM.java.

References audio.AudioInterfacePCM.sourceDataLine.

Referenced by audio.AbstractCODEC.writeDirectly().

{

if ( this.sourceDataLine == null ) {

return;

}

this.sourceDataLine.write( buff, 0, buff.length );

}

Member Data Documentation

volatile Thread audio.AudioInterfacePCM.audioPlayerThread = null [private] |

Definition at line 82 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM(), audio.AudioInterfacePCM.audioPlayerWorker(), and audio.AudioInterfacePCM.cleanUp().

volatile Packetizer audio.AudioInterfacePCM.audioSender = null [private] |

Definition at line 69 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.sendAudioFrame(), audio.AudioInterfacePCM.setAudioSender(), and audio.AudioInterfacePCM.stopRecording().

volatile Thread audio.AudioInterfacePCM.audioSenderThread = null [private] |

Definition at line 67 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM(), audio.AudioInterfacePCM.cleanUp(), and audio.AudioInterfacePCM.pduSenderWorker().

long audio.AudioInterfacePCM.callLength = 0 [private] |

Measured call length in milliseconds.

Definition at line 95 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.startPlay(), audio.AudioInterfacePCM.stopPlay(), and audio.AudioInterfacePCM.writeBuffersToAudioOutput().

long audio.AudioInterfacePCM.deltaTimePlayerMinusMic = 0 [private] |

Definition at line 92 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.writeBuffersToAudioOutput().

final int audio.AudioInterfacePCM.FRAME_COUNT = 10 [static, private] |

Audio buffering depth in number of frames.

Definition at line 33 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.writeBuffersToAudioOutput().

final int audio.AudioInterfacePCM.FRAME_INTERVAL = 20 [static, private] |

Frame interval in milliseconds.

Definition at line 39 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.audioPlayerWorker(), audio.AudioInterfacePCM.getAudioIn(), audio.AudioInterfacePCM.getAudioOut(), audio.AudioInterfacePCM.getSampleSize(), audio.AudioInterfacePCM.pduSenderWorker(), audio.AudioInterfacePCM.ringerWorker(), audio.AudioInterfacePCM.writeBuffered(), and audio.AudioInterfacePCM.writeBuffersToAudioOutput().

boolean audio.AudioInterfacePCM.jitBufFirst = true [private] |

Definition at line 90 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.writeBuffersToAudioOutput().

long audio.AudioInterfacePCM.jitBufFudge = 0 [private] |

Definition at line 89 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.startPlay(), audio.AudioInterfacePCM.stopPlay(), and audio.AudioInterfacePCM.writeBuffersToAudioOutput().

int audio.AudioInterfacePCM.jitBufGet = 0 [private] |

Definition at line 88 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.startPlay(), audio.AudioInterfacePCM.stopPlay(), and audio.AudioInterfacePCM.writeBuffersToAudioOutput().

int audio.AudioInterfacePCM.jitBufPut = 0 [private] |

Definition at line 87 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.startPlay(), audio.AudioInterfacePCM.stopPlay(), audio.AudioInterfacePCM.writeBuffered(), and audio.AudioInterfacePCM.writeBuffersToAudioOutput().

long audio.AudioInterfacePCM.lastMicTimestamp = 0 [private] |

Definition at line 76 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.micDataRead(), audio.AudioInterfacePCM.startRecording(), and audio.AudioInterfacePCM.writeBuffersToAudioOutput().

final int audio.AudioInterfacePCM.LLBS = 6 [static, private] |

Low-level water mark used for de-jittering.

Definition at line 36 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.getAudioIn(), audio.AudioInterfacePCM.getAudioOut(), and audio.AudioInterfacePCM.writeBuffersToAudioOutput().
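The three constants FRAME_COUNT, FRAME_INTERVAL, and LLBS determine several quantities used elsewhere on this page, such as the playback start threshold ( FRAME_COUNT + LLBS ) / 2 in writeBuffersToAudioOutput(). The sketch below works these out. The class and method names are hypothetical, and the byte count per frame assumes 16-bit samples at 8 kHz, which matches the default sz = 320 in the source.

```java
/** Hypothetical helper deriving buffer quantities from the documented constants. */
class BufferMath {
    static final int FRAME_COUNT = 10;    // buffering depth in frames
    static final int FRAME_INTERVAL = 20; // frame interval in ms
    static final int LLBS = 6;            // low-level water mark

    /** Bytes needed for one frame of audio in the given format. */
    static int bytesPerFrame(int sampleRateHz, int bytesPerSample, int channels) {
        return sampleRateHz * bytesPerSample * channels * FRAME_INTERVAL / 1000;
    }

    /** Frames that must be queued before playback starts. */
    static int startThresholdFrames() {
        return (FRAME_COUNT + LLBS) / 2;
    }

    /** The same threshold expressed as buffered audio in milliseconds. */
    static int startThresholdMs() {
        return startThresholdFrames() * FRAME_INTERVAL;
    }
}
```

Under these assumptions playback starts once 8 frames, or 160 ms of audio, are queued, and a mono frame occupies 320 bytes.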

int audio.AudioInterfacePCM.micBufGet = 0 [private] |

Definition at line 75 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.readWithTimestamp(), and audio.AudioInterfacePCM.startRecording().

int audio.AudioInterfacePCM.micBufPut = 0 [private] |

Definition at line 74 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.micDataRead(), audio.AudioInterfacePCM.readWithTimestamp(), and audio.AudioInterfacePCM.startRecording().

volatile Thread audio.AudioInterfacePCM.micRecorderThread = null [private] |

Definition at line 68 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.cleanUp(), audio.AudioInterfacePCM.micRecorderWorker(), audio.AudioInterfacePCM.startRecording(), and audio.AudioInterfacePCM.stopRecording().

AudioFormat audio.AudioInterfacePCM.mono44k [private] |

Definition at line 61 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM(), and audio.AudioInterfacePCM.getAudioIn().

AudioFormat audio.AudioInterfacePCM.mono8k [private] |

Definition at line 59 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM(), audio.AudioInterfacePCM.getAudioIn(), audio.AudioInterfacePCM.getAudioOut(), and audio.AudioInterfacePCM.getSampleSize().

AudioBuffer [] audio.AudioInterfacePCM.playBuffer = new AudioBuffer[ FRAME_COUNT + FRAME_COUNT ] [private] |

Definition at line 86 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.concealMissingDataForAudioOutput(), audio.AudioInterfacePCM.getAudioOut(), audio.AudioInterfacePCM.writeBuffered(), and audio.AudioInterfacePCM.writeBuffersToAudioOutput().

boolean audio.AudioInterfacePCM.playerIsEnabled = false [private] |

Definition at line 91 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.startPlay(), audio.AudioInterfacePCM.stopPlay(), and audio.AudioInterfacePCM.writeBuffersToAudioOutput().

boolean audio.AudioInterfacePCM.propertyBigBuff = false [private] |

Big buffers.

Definition at line 48 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.getAudioIn(), and audio.AudioInterfacePCM.getAudioOut().

String audio.AudioInterfacePCM.propertyInputDeviceName = null [private] |

Input device name.

Definition at line 51 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.getAudioIn().

String audio.AudioInterfacePCM.propertyOutputDeviceName = null [private] |

Output device name.

Definition at line 54 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.getAudioOut().

boolean audio.AudioInterfacePCM.propertyStereoRec = false [private] |

Stereo recording.

Definition at line 45 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.getAudioIn(), audio.AudioInterfacePCM.getAudioOut(), and audio.AudioInterfacePCM.writeBuffered().

boolean audio.AudioInterfacePCM.providingRingBack = false [private] |

Definition at line 104 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.ringerWorker(), audio.AudioInterfacePCM.startRinging(), and audio.AudioInterfacePCM.stopRinging().

AudioBuffer [] audio.AudioInterfacePCM.recordBuffer = new AudioBuffer[ FRAME_COUNT ] [private] |

Definition at line 73 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.getAudioIn(), audio.AudioInterfacePCM.micDataRead(), audio.AudioInterfacePCM.readWithTimestamp(), and audio.AudioInterfacePCM.startRecording().

volatile Thread audio.AudioInterfacePCM.ringerThread = null [private] |

Definition at line 100 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM(), audio.AudioInterfacePCM.cleanUp(), and audio.AudioInterfacePCM.ringerWorker().

byte [] audio.AudioInterfacePCM.ringSamples = null [private] |

Definition at line 101 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.initializeRingerSamples(), and audio.AudioInterfacePCM.ringerWorker().

long audio.AudioInterfacePCM.ringTimer = -1 [private] |

Definition at line 105 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.ringerWorker(), and audio.AudioInterfacePCM.stopRinging().

byte [] audio.AudioInterfacePCM.silenceSamples = null [private] |

Definition at line 102 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.initializeRingerSamples(), audio.AudioInterfacePCM.readWithTimestamp(), and audio.AudioInterfacePCM.ringerWorker().

SourceDataLine audio.AudioInterfacePCM.sourceDataLine = null [private] |

Definition at line 81 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM(), audio.AudioInterfacePCM.audioPlayerWorker(), audio.AudioInterfacePCM.cleanUp(), audio.AudioInterfacePCM.getAudioOut(), audio.AudioInterfacePCM.ringerWorker(), audio.AudioInterfacePCM.startPlay(), audio.AudioInterfacePCM.startRinging(), audio.AudioInterfacePCM.stopPlay(), audio.AudioInterfacePCM.stopRinging(), audio.AudioInterfacePCM.writeBuffered(), audio.AudioInterfacePCM.writeBuffersToAudioOutput(), audio.AudioInterfacePCM.writeDirectIfAvail(), and audio.AudioInterfacePCM.writeDirectly().

AudioFormat audio.AudioInterfacePCM.stereo8k [private] |

Definition at line 60 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM(), audio.AudioInterfacePCM.getAudioIn(), and audio.AudioInterfacePCM.getAudioOut().

TargetDataLine audio.AudioInterfacePCM.targetDataLine = null [private] |

Definition at line 66 of file AudioInterfacePCM.java.

Referenced by audio.AudioInterfacePCM.AudioInterfacePCM(), audio.AudioInterfacePCM.cleanUp(), audio.AudioInterfacePCM.getAudioIn(), audio.AudioInterfacePCM.micDataRead(), audio.AudioInterfacePCM.micRecorderWorker(), audio.AudioInterfacePCM.pduSenderWorker(), audio.AudioInterfacePCM.startRecording(), and audio.AudioInterfacePCM.stopRecording().

The documentation for this class was generated from the following file:

- audio/AudioInterfacePCM.java

1.7.2
